Updated: 21.11.2012

There are many tools on the market that claim to be enterprise service buses. But only NServiceBus incorporates true service-oriented architecture and many years of experience in designing such systems. Borrowing Morpheus’s analogy from The Matrix, choosing a traditional service bus is like taking the blue pill. Only if you choose NServiceBus, aka the red pill, do you stay in the wonderland of real service orientation and discover how deep the rabbit hole goes.

Before we dive deep into NServiceBus, there is one simple rule you have to remember, even if it is the only thing you remember from this presentation.

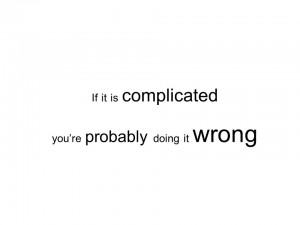

NServiceBus follows a simple design rule which stands above all:

Before you ever doubt the way NServiceBus behaves, always remember this rule. You might be doing something wrong.

When we visit nservicebus.com, we are immediately presented with the key arguments for the product. Looking closer at them, we can spot the four points that matter most.

NServiceBus claims to provide reliable integration with automatic retries, which should make it more reliable than regular WCF or HTTP-style service communication. NServiceBus categorizes itself as an open-source service bus. The main communication pattern behind NServiceBus is called “publish and subscribe”, and long-running workflows or processes can be stored durably with NServiceBus. We are going to cover all these topics in this presentation.

Let’s demystify the keyword “service bus”.

The bus is an architectural style. According to Roy Fielding’s doctoral dissertation from 2000, an architectural style is a coordinated set of architectural constraints that restricts the roles and features of architectural elements and the allowed relationships among those elements within any architecture that conforms to that style. Another example of an architectural style is REpresentational State Transfer (aka REST). An architectural style is strictly explicit about what is and isn’t allowed in an architecture, but it is never detailed enough to be an architecture itself.

The bus architectural style is pretty difficult to draw on paper. It is often drawn as a centralized line or pipe, but this representation is misleading. In a bus architecture there is no physical Ethernet box that things connect to remotely; the bus is physically everywhere. Event sources and sinks communicate via channels in the bus: sources place events in channels, and sinks are then notified that those events are available.

In contrast to the widely used broker architectural style, the bus does not centralize routing, data transformation or orchestration. With that approach it avoids the 11th fallacy of distributed systems, the common but mistaken belief that business logic can and should be centralized. Because it has no central point, the bus architectural style has no single point of failure, which makes it easier to achieve stability and sustainability at larger scales.

The bus architectural style doesn’t imply physically separate channels. Channels can be either physical or logical. It is perfectly valid to deploy all sources and sinks on the same physical machine, in which case multiple logical channels share that one machine.

Communication in the bus is always dispersed over multiple channels, which decouples sources and sinks from each other.
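To make “logical channels” concrete, here is a minimal in-process sketch. It is written in Python purely for illustration (the demos linked in this post are C# projects), and `Channel`, `place` and `drain` are hypothetical names, not NServiceBus API. Each sink owns a channel; the source holds references to the channels it needs and places events in them directly, so nothing in between inspects or routes the messages. Both channels live in one process, i.e. two logical channels on one physical machine.

```python
from collections import deque
from typing import Deque, List


class Channel:
    """Hypothetical logical channel: a named FIFO owned by one sink."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._events: Deque[dict] = deque()

    def place(self, event: dict) -> None:
        # A source drops an event into the channel; nothing inspects or routes it.
        self._events.append(event)

    def drain(self) -> List[dict]:
        # The owning sink takes whatever events have become available.
        items = list(self._events)
        self._events.clear()
        return items


# Two logical channels living in the same process (same physical machine).
billing_in = Channel("billing")
shipping_in = Channel("shipping")

# The source holds the channels it needs; there is no broker in between.
for channel in (billing_in, shipping_in):
    channel.place({"type": "OrderAccepted", "order_id": 42})

print([e["order_id"] for e in billing_in.drain()])   # [42]
print([e["order_id"] for e in shipping_in.drain()])  # [42]
print(billing_in.drain())                            # []
```

Backing each `Channel` with a durable queue on its own machine would turn the same logical layout into physically separate channels without changing the source or the sinks.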

A bus is usually much simpler than a classical broker-style architecture. Buses have no routing or service failover capabilities of their own; failover must be achieved by installing robust, clustered hardware infrastructure. A bus also does not analyze message content and therefore provides no content-based routing.

But everything comes at a price. Despite the great advantages of the bus architectural style, there is also a disadvantage: it is often more difficult to design a distributed solution in the bus architectural style than with a centralized broker-style architecture. A true SOA architectural style will most likely be based on the bus architectural style, because a bus doesn’t break service autonomy. We’ll discover later what this means.

But how do we deploy sources and sinks to the bus architecture? Don’t worry. NServiceBus makes it very easy to get on the bus. Enjoy the ride with the demo:

https://github.com/danielmarbach/nservicebus.introduction/tree/ServiceBus

But what makes NServiceBus reliable, and why do we even need reliability?

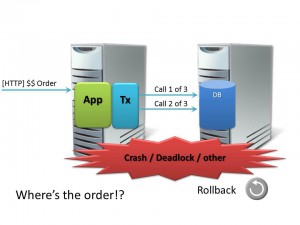

Consider a classical application in which clients invoke remote procedure calls on the server. What happens to the initiating request when a crash occurs, for example when the IIS application pool recycles, or when the remote host refuses the connection because too many transactions are waiting to time out? The initiating request is lost or, if you are lucky, survives somewhere as cryptic information in a log file.

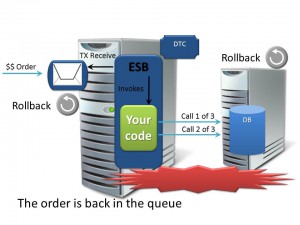

NServiceBus uses the well-known asynchronous fire-and-forget messaging pattern to solve these kinds of issues. Note that simple in-memory messaging cannot solve these scenarios, because in-memory messages are lost when the process crashes. We need durable messaging.

The messaging approach inverts transaction management. The transaction is opened before a message is received from the queue. The service bus then invokes the code that handles that message type. This code also runs inside the transaction, which spans the messaging infrastructure, the executing code and finally the database containing the business data. If processing fails at any point, the transaction is rolled back and the message (and hence the business intent) is put back into the messaging infrastructure.
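The inverted transaction can be simulated in a few lines. This is a hypothetical Python sketch, not how NServiceBus implements it: `receive_in_transaction`, `inbox` and `database` are made-up names, a deque stands in for the durable queue and a dict for the business database. The message is only removed for good if the handler succeeds; on failure both the data and the message are restored.

```python
from collections import deque
from copy import deepcopy
from typing import Any, Callable, Deque, Dict

Message = Dict[str, Any]

inbox: Deque[Message] = deque([{"type": "PlaceOrder", "order_id": 7}])
database: Dict[int, str] = {}


def receive_in_transaction(handler: Callable[[Message, Dict[int, str]], None]) -> bool:
    """Simulated unit of work spanning queue, handler and database.

    Returns True if the message was committed, False if it was rolled back
    (or if there was nothing to receive).
    """
    if not inbox:
        return False
    db_snapshot = deepcopy(database)  # "begin transaction" on the database
    message = inbox.popleft()         # receive inside the same transaction
    try:
        handler(message, database)    # business code runs inside it too
        return True                   # "commit": message consumed, data kept
    except Exception:
        database.clear()
        database.update(db_snapshot)  # roll back the business data...
        inbox.appendleft(message)     # ...and put the business intent back
        return False


def flaky_handler(message: Message, db: Dict[int, str]) -> None:
    db[message["order_id"]] = "placed"
    raise RuntimeError("crash after writing, before commit")


def good_handler(message: Message, db: Dict[int, str]) -> None:
    db[message["order_id"]] = "placed"


print(receive_in_transaction(flaky_handler), len(inbox), database)  # False 1 {}
print(receive_in_transaction(good_handler), len(inbox), database)   # True 0 {7: 'placed'}
```

The first attempt leaves no half-written order behind and the message is still queued, so the second attempt can process it from scratch.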

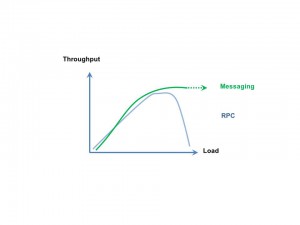

But what makes this approach more reliable and better performing than classic remote-procedure-call style communication?

At first, RPC-style communication seems to perform better than messaging. But as the load on the system increases and no more threads are available, RPC performance degrades. One cause is the need to acquire a thread from the thread pool and to allocate memory for the parameters of each request. A messaging infrastructure, by contrast, can deterministically assign a fixed number of threads to handle incoming messages, and message consumers can be scaled out horizontally when the load increases. Of course, the infrastructure must be provisioned with enough hardware so that the queue of incoming requests does not explode.
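The difference between “a thread per request” and “a fixed number of workers” can be sketched with Python’s standard `queue` and `threading` modules. The worker count of 4 and the doubling “handler” are arbitrary assumptions for illustration; this is not NServiceBus configuration. Four threads drain a queue of 1,000 messages, and the extra load only makes the queue longer.

```python
import queue
import threading
from typing import List, Optional

WORKER_COUNT = 4  # chosen up front; more load means a longer queue, not more threads

incoming: "queue.Queue[Optional[int]]" = queue.Queue()
processed: List[int] = []
processed_lock = threading.Lock()


def worker() -> None:
    while True:
        message = incoming.get()  # blocks until a message is available
        if message is None:       # sentinel: no more work for this worker
            break
        with processed_lock:
            processed.append(message * 2)  # stand-in for real message handling


threads = [threading.Thread(target=worker) for _ in range(WORKER_COUNT)]
for t in threads:
    t.start()

# A burst of 1,000 requests waits in the queue instead of demanding
# 1,000 threads (and 1,000 sets of buffers) at the same moment.
for i in range(1000):
    incoming.put(i)
for _ in threads:
    incoming.put(None)  # one sentinel per worker, queued after all real messages
for t in threads:
    t.join()

print(len(processed))  # 1000
```

Scaling out horizontally would mean running more such worker processes, possibly on other machines, against the same queue.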

Message receivers wrap the consumption of a message in a transaction, but only at the moment they are ready to consume it. In RPC communication, the transaction is opened after the data has been received. When load increases and the infrastructure spends more time allocating and waiting for available resources, RPC transactions are held longer than in messaging communication.

Let’s do a demo:

https://github.com/danielmarbach/nservicebus.introduction/tree/Reliability

But what about publish and subscribe? Let’s dive into it.

Wait, why are we talking about publish and subscribe here? Let’s assume once again that we have a classical RPC style architecture.

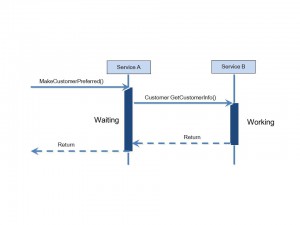

When a service named “A” issues a synchronous call (e.g. MakeCustomerPreferred) to a service named “B”, the processing time of service “B” directly affects that of service “A”. This means there is temporal coupling between services “A” and “B”. Besides that, service “A” is also spatially coupled to service “B”, meaning that “A” is coupled to the physical or logical location of “B”. Let us ignore the spatial coupling and look for a way to overcome the temporal coupling. Any ideas?

A simple approach would be to dispatch a thread that fetches the customer information from service “B”. But this wouldn’t solve the problem, because the calling thread still needs to wait for the result and therefore holds resources while waiting. Polling isn’t a good approach either: resources would still be held while waiting, and service “B” would see additional load from every consumer, depending on the polling interval. We need to separate the inter-service communication in time.

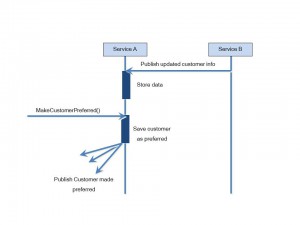

Great. We solved the temporal coupling by applying publish and subscribe. But now service “A” makes its decisions based on stale data. This is actually a good thing: the chosen architecture forces discussions with the customer. Can you live with stale data? How old can it be? What happens if stale data is detected? And if the business tells you that publish and subscribe doesn’t work, then request and response doesn’t work either, because it can deliver stale data too! Data is stale as soon as you acquire it. Just live with it!
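A minimal sketch of how publish and subscribe removes the temporal coupling, in Python and with hypothetical class names (`CustomerService` as service “B”, `OrderService` as service “A”). For brevity the event is delivered by a direct in-process call; on a real bus it would travel asynchronously through a durable channel, which is exactly why the local copy in “A” can be stale.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass(frozen=True)
class CustomerMadePreferred:
    customer_id: int


Handler = Callable[[CustomerMadePreferred], None]


class CustomerService:
    """Hypothetical service "B": the authority that decides who is preferred."""

    def __init__(self) -> None:
        self._subscribers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._subscribers.append(handler)

    def make_preferred(self, customer_id: int) -> None:
        # B announces the fact. In this sketch the handlers are called directly;
        # a bus would deliver the event asynchronously instead.
        event = CustomerMadePreferred(customer_id)
        for handler in self._subscribers:
            handler(event)


class OrderService:
    """Hypothetical service "A": keeps its own, possibly stale, copy of the data."""

    def __init__(self) -> None:
        self.preferred: Set[int] = set()

    def on_customer_made_preferred(self, event: CustomerMadePreferred) -> None:
        self.preferred.add(event.customer_id)

    def discount_for(self, customer_id: int) -> float:
        # Decided from local data only: no synchronous call to B at this point.
        return 0.1 if customer_id in self.preferred else 0.0


b = CustomerService()
a = OrderService()
b.subscribe(a.on_customer_made_preferred)
print(a.discount_for(5))  # 0.0  (A has not heard about customer 5 yet)
b.make_preferred(5)
print(a.discount_for(5))  # 0.1
```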

Some important constraints must be fulfilled to successfully apply publish and subscribe.

Subscribers must be able to make decisions based on somewhat stale data. But publish and subscribe should not be applied blindly everywhere. Data that needs to be consistent should reside inside a service boundary. If data that requires strong consistency is spread across service boundaries, a lot of communication (with events) is necessary to keep it fresh. This again falls for several of the fallacies of distributed systems (e.g. bandwidth is infinite, latency is zero, transport cost is zero).

It is important to have a strong division of responsibility between publishers and subscribers. The business process should clearly define which service acts as the publisher of a given business event (e.g. CustomerMadePreferred) and which services react to it.

Like in real life there can only be one logical publisher of a given kind of event. There can be multiple physical processes which publish any given kind of event but all these processes can be clearly assigned to a specific business authority.
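The “one logical publisher per event type” rule can be expressed as a small ownership check. `EventAuthority` and the host names are hypothetical, and NServiceBus does not necessarily enforce the rule this way; the sketch only shows that several physical hosts may publish an event as long as they all act for the same logical service.

```python
from typing import Dict


class EventAuthority:
    """Hypothetical registry: every event type belongs to one logical publisher."""

    def __init__(self) -> None:
        self._owner: Dict[str, str] = {}

    def assign(self, event_type: str, logical_service: str) -> None:
        current = self._owner.setdefault(event_type, logical_service)
        if current != logical_service:
            raise ValueError(f"{event_type} already belongs to {current}")

    def may_publish(self, event_type: str, logical_service: str) -> bool:
        # Any number of physical processes may publish, as long as they
        # all run on behalf of the same logical (business) authority.
        return self._owner.get(event_type) == logical_service


authority = EventAuthority()
authority.assign("OrderAccepted", "Sales")

# Two physical Sales hosts, one logical publisher.
hosts = {"sales-web-01": "Sales", "sales-web-02": "Sales", "billing-01": "Billing"}
print({host: authority.may_publish("OrderAccepted", svc) for host, svc in hosts.items()})
# {'sales-web-01': True, 'sales-web-02': True, 'billing-01': False}
```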

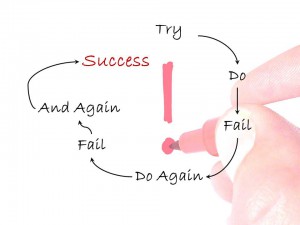

In order to get the benefits of increased parallelism and better fault tolerance, you must assume that messages may arrive at services out of order. Consider the following scenario with three parties: sales, billing and shipping. Sales publishes an order accepted event to which billing and shipping subscribe. Billing bills the order and, as soon as the bill is paid, raises an order billed event. Shipping creates shipping information as soon as an order is accepted. Now imagine a race condition in which the order billed event arrives at shipping before the order accepted event. In this case shipping knows nothing about that order, so retrieving the shipping information by order ID fails with an exception. But that is perfectly fine! Simply retry such messages later. This is usually a huge mind shift: by relying on a retry mechanism and accepting that messages may arrive out of order, you also come to expect exceptions during processing.
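The race condition above, and why retrying resolves it, can be simulated in Python. `Shipping`, `UnknownOrder` and `deliver` are hypothetical names, and requeuing a failed message at the back of an in-memory deque is a deliberate simplification of a real retry mechanism such as the automatic retries NServiceBus advertises.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

Message = Tuple[str, int]  # (event type, order id)


class UnknownOrder(Exception):
    """Raised when shipping hears about an order it has not seen yet."""


class Shipping:
    def __init__(self) -> None:
        self.orders: Dict[int, str] = {}

    def handle(self, message: Message) -> None:
        kind, order_id = message
        if kind == "OrderAccepted":
            self.orders[order_id] = "awaiting payment"
        elif kind == "OrderBilled":
            if order_id not in self.orders:
                raise UnknownOrder(order_id)  # fail now, succeed on a later retry
            self.orders[order_id] = "ready to ship"


def deliver(inbox: Deque[Message], handle: Callable[[Message], None],
            max_attempts: int = 5) -> None:
    """Hand each message to the handler; on failure, requeue it at the back."""
    attempts: Dict[Message, int] = {}
    while inbox:
        message = inbox.popleft()
        try:
            handle(message)
        except UnknownOrder:
            attempts[message] = attempts.get(message, 0) + 1
            if attempts[message] >= max_attempts:
                raise  # a real system would move it to an error queue instead
            inbox.append(message)


shipping = Shipping()
# The race condition from the text: "billed" arrives before "accepted".
deliver(deque([("OrderBilled", 1), ("OrderAccepted", 1)]), shipping.handle)
print(shipping.orders)  # {1: 'ready to ship'}
```

The exception on the first attempt is expected and harmless: it simply buys time until the missing event has been processed.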

We talked a lot about the term service. But what does it mean and what is a business authority?

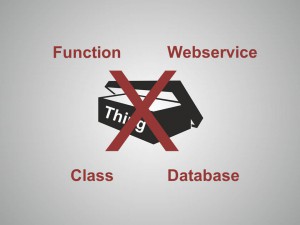

Unfortunately, the term service is heavily overloaded these days. That’s why we first have to define what a service is not. Something whose only functionality is, for example, checking whether an order is valid is a function, not a service. Also, when talking about services, people in the software industry immediately think of web services; in SOA the term service has nothing to do with web services. Nor is a service a class; it is rather an autonomous set of components that belong together in terms of business functionality. Nor is it something that only offers create, read, update and delete operations; that is a database, not a service.

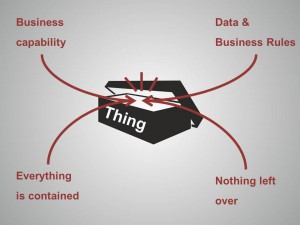

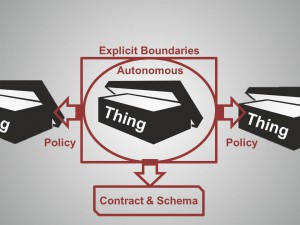

My advice: every time you hear the term service in an SOA-related context, just replace it with the word “thing”. Let us describe the characteristics of this “thing” in the SOA world:

The “thing” is the technical authority for a specific business capability. For example, if we talk about a sales “thing”, then all data belonging to the sales business capability remains within that “thing”. The same holds for all business rules attached to this business capability. Once we have identified all “things” in our business domain, nothing is left over: everything is clearly assigned to the “thing” it belongs to. So everything is contained within a “thing”.

There are four tenets describing real service orientation, or shall we call it “thing” orientation? A “thing” needs to be autonomous, meaning that it cannot rely on anything else in order to be operational. It also needs to have explicit boundaries: it must be crystal clear what belongs to it and what doesn’t. It shares only contracts and schema with others, never classes or types. Consequently, none of its behavior can be invoked from outside; only its effects can be observed. If interaction between “things” is necessary, it has to be controlled by policies. Policies, aka sagas, survive restarts and are built for long-running operations that can cope with the other party being unavailable.
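Sharing contract and schema instead of classes can be illustrated with JSON: each “thing” defines its own type, and only the serialized shape crosses the boundary. This Python sketch is an illustration under made-up assumptions (the message type name and fields are hypothetical), not the wire format NServiceBus uses.

```python
import json
from dataclasses import asdict, dataclass


# --- Sales "thing": this class never leaves the sales boundary ---
@dataclass
class SalesOrderAccepted:
    order_id: int
    total_cents: int


def to_wire(event: SalesOrderAccepted) -> str:
    # Only the schema crosses the boundary: a type name plus plain fields.
    return json.dumps({"type": "OrderAccepted", **asdict(event)})


# --- Shipping "thing": its own class, built from the shared schema ---
@dataclass
class ShippingOrderAccepted:
    order_id: int  # shipping keeps only the fields it cares about


def from_wire(wire: str) -> ShippingOrderAccepted:
    data = json.loads(wire)
    if data.get("type") != "OrderAccepted":
        raise ValueError(f"unexpected message type: {data.get('type')}")
    return ShippingOrderAccepted(order_id=data["order_id"])


wire = to_wire(SalesOrderAccepted(order_id=42, total_cents=9900))
print(wire)             # {"type": "OrderAccepted", "order_id": 42, "total_cents": 9900}
print(from_wire(wire))  # ShippingOrderAccepted(order_id=42)
```

Sales can rename or extend its class freely; as long as the agreed fields stay in the schema, shipping is unaffected.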

Before we dive deeper into policies let’s see some publish and subscribe code!

Let’s do a demo:

https://github.com/danielmarbach/nservicebus.introduction/tree/PublishSubscribe

But what about complex business processes, which need interactions with several parties and can be long running?

To clearly define what a long-running process is, we first need to talk about the term “process”. A process can be described as a set of activities performed in a certain sequence as a result of internal and external triggers (messages). A basic example of process control is an “if-then”; more complex processes can include state machines. A long-running process is a process whose execution lifetime exceeds the time needed to process a single external event or message. In other words, a process becomes long-running when it handles multiple external events or triggers. Such a process needs to be stateful, and its state needs to survive system restarts. Long-running processes therefore provide a state management facility that enables a system to encapsulate the logic and data for handling an external stream of events. Such processes were previously described under the term “policies”, also known as “sagas”.

But how does such a saga differ from the regular message handlers we have seen previously? It has state; message handlers don’t! Let’s dive into more aspects of sagas and how they are managed.

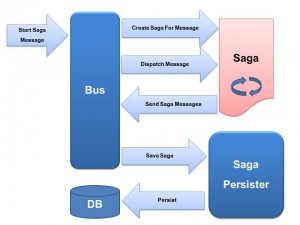

Sagas can be started by one or more message types. As soon as a message arrives and is identified as a starting message, a new saga instance is created and the message is dispatched to that instance. The saga handles the message like a regular message handler, but can additionally store state in its saga data. Right after the message has been consumed, the saga state is saved to a persistent store. A started saga has a unique correlation identifier, which is put on every message sent from the saga. This allows all incoming messages to be correlated back to the correct saga instance.

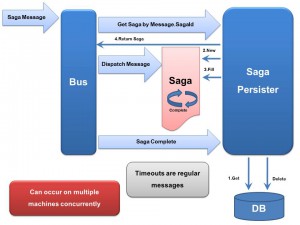

When a further saga message arrives, the correct saga instance is retrieved from the persistent store and the message is dispatched to it. This processing can happen on multiple machines! When a saga completes, either by timing out or by successfully finishing its work, its state is removed from the persistent store. The infrastructure ensures saga uniqueness so that the state of the system doesn’t get out of sync (see the unique constraint of sagas in the demo).
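The saga lifecycle described in the last two paragraphs (start, persist, correlate, complete, remove state, stay unique) can be sketched as follows. All names are hypothetical, dicts stand in for the persistent store, and the order ID index plays the role of the unique constraint; it illustrates the idea, not NServiceBus’s saga API.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ShippingSagaData:
    correlation_id: str
    order_id: int


class SagaStore:
    """Hypothetical persistent store; dicts stand in for a database table."""

    def __init__(self) -> None:
        self.rows: Dict[str, ShippingSagaData] = {}
        self.by_order: Dict[int, str] = {}  # acts as a unique constraint on order_id

    def insert(self, data: ShippingSagaData) -> None:
        if data.order_id in self.by_order:
            raise ValueError(f"a saga for order {data.order_id} already exists")
        self.rows[data.correlation_id] = data
        self.by_order[data.order_id] = data.correlation_id

    def load(self, correlation_id: str) -> ShippingSagaData:
        return self.rows[correlation_id]

    def remove(self, data: ShippingSagaData) -> None:
        del self.rows[data.correlation_id]
        del self.by_order[data.order_id]


store = SagaStore()
outbox: List[Tuple[str, str, int]] = []  # (message type, correlation id, order id)


def on_order_accepted(order_id: int) -> None:
    # Starting message: create a new instance with a fresh correlation id,
    # persist it, and stamp the id on every message the saga sends.
    data = ShippingSagaData(correlation_id=str(uuid.uuid4()), order_id=order_id)
    store.insert(data)
    outbox.append(("BillCustomer", data.correlation_id, order_id))


def on_customer_billed(correlation_id: str) -> None:
    # Follow-up message: the correlation id locates the right instance,
    # no matter which machine started it.
    data = store.load(correlation_id)
    outbox.append(("ShipOrder", data.correlation_id, data.order_id))
    store.remove(data)  # saga complete: its state is deleted


on_order_accepted(7)
_, correlation_id, _ = outbox[0]
print(len(store.rows))                 # 1
on_customer_billed(correlation_id)
print(len(store.rows), outbox[-1][0])  # 0 ShipOrder
```

Calling `on_order_accepted` twice for the same order while its first saga is still running raises `ValueError`, which is the uniqueness guarantee in miniature.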

This all sounds pretty easy. The hardest part in sagas is analyzing the business processes to identify what the individual saga steps should be. Sagas can also be used to orchestrate legacy systems together.

Sagas should be used only inside service boundaries whenever possible. This can be achieved by dividing workflows/orchestrations along service boundaries: events are published at the end of a sub-flow in one service, and those events trigger sub-flows in other services.

If you adhere to this principle your sagas quickly become the domain model inside your service boundaries.

A good piece of advice before starting with sagas: first decompose the system into services, and if something sounds “orchestration-ish”, decompose it into sagas. Sagas make it easy to define time-bound business processes, because they understand the concept of time and allow you to model it explicitly. Use the power of sagas to define time-bound processes!
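A time-bound process can be modelled with a saga that requests a timeout. The business rule here (cancel orders that are not paid within three days) is a made-up example, and `PaymentSaga` and `TimeoutManager` are hypothetical; the clock is passed in explicitly so the behaviour is deterministic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class PaymentSaga:
    """Hypothetical policy: an order is cancelled if it is not paid in time."""
    order_id: int
    deadline: datetime
    outcome: Optional[str] = None

    def on_payment_received(self) -> None:
        if self.outcome is None:
            self.outcome = "ship"

    def on_timeout(self) -> None:
        # The timeout is just another message; if payment already arrived,
        # the saga has moved on and the timeout is ignored.
        if self.outcome is None:
            self.outcome = "cancel"


class TimeoutManager:
    """Hypothetical stand-in for durable timeouts, driven by an explicit clock."""

    def __init__(self) -> None:
        self.pending: List[PaymentSaga] = []

    def request(self, saga: PaymentSaga) -> None:
        self.pending.append(saga)

    def tick(self, now: datetime) -> None:
        due = [s for s in self.pending if s.deadline <= now]
        self.pending = [s for s in self.pending if s.deadline > now]
        for saga in due:
            saga.on_timeout()


start = datetime(2012, 11, 21, 9, 0)
timeouts = TimeoutManager()
slow = PaymentSaga(order_id=1, deadline=start + timedelta(days=3))
fast = PaymentSaga(order_id=2, deadline=start + timedelta(days=3))
for saga in (slow, fast):
    timeouts.request(saga)

fast.on_payment_received()
timeouts.tick(start + timedelta(days=1))  # nothing due yet
timeouts.tick(start + timedelta(days=3))  # both deadlines reached
print(slow.outcome, fast.outcome)         # cancel ship
```

Treating the timeout as an ordinary message that may arrive after the work is already done keeps the saga simple: it only has to check its own state.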

Let’s orchestrate:

https://github.com/danielmarbach/nservicebus.introduction/tree/Sagas

What customers say about NServiceBus…

You are excited to get started but afraid of the license costs? Don’t worry, NServiceBus has a lot to offer. Start free of charge and change the license model according to your needs. Out of the box, NServiceBus is free for development use and only needs to be licensed in active production environments. Disaster recovery sites and testing environments do not need to be licensed! After that you can start with the basic license. It is limited to a single site or datacenter and to a throughput of 2 to 32 messages per second. There is also the option of a monthly subscription license for higher performance, with a flexible pay-as-you-go, per-core license model. It is licensed per server core (or virtual core, whichever is lower), and only servers that process messages require a license; servers that only send messages (like web servers in many cases) do not. If you install your software on many machines and/or across many sites, the Royalty-free License is most appropriate. It is licensed per developer programming against the NServiceBus API (including the system’s messages), covers all machines and sites the software is deployed to, and has all performance-limiting restrictions lifted. Get started! What are you waiting for?

Before ending this presentation I have to ask you the following question: After all you have seen in the past few hours, which pill do you take?

Special thanks to Udi Dahan, Samuel Camhi and NServiceBus Ltd. for supporting me. Some diagrams are copyrighted by NServiceBus Ltd. All images used in this presentation are copyrighted by www.sxc.hu.


Just updated the blogpost to reflect some of the latest changes on the website and the licensing model