This is the presentation I gave at the .Net System Event by bbv Software Services AG in Lucerne in June 2012:

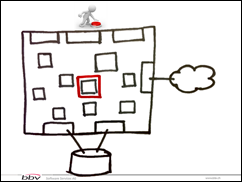

When we start a new project, everything is small, nice and easy.

New features are added rapidly, the system is easy to understand and change.

But far too often and far too quickly, systems become something like this:

The team isn’t happy about how the code is structured, everything is hard to change and nobody has the overview anymore. The system rots because nobody on the team dares to clean things up: something might break anywhere.

Furthermore, nobody knows exactly anymore which features the software really contains and how they behave in detail.

So what can we do to prevent this from happening?

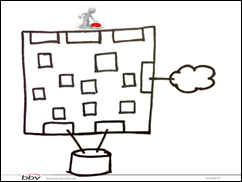

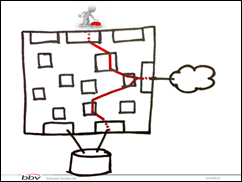

Let’s take a look at a typical system:

There is a user who interacts with the system (top), there are external services that the system is connected with (right) and there probably is a database persisting the system state (bottom).

To keep this system changeable, we use…

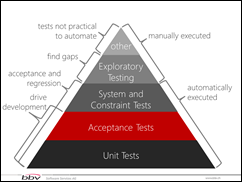

…unit tests. Unit tests allow us to refactor the code, clean things up and simplify them without breaking existing functionality.

A unit test checks a single class in the system – isolated from the rest. The unit test gives us a guarantee that when changing the internals of the class under test, the rest of the system is not compromised as long as the tests are passing. Therefore, we can keep the individual parts of the system from rotting.

With unit tests it’s easy to get lost in details. Therefore, we need something that gives us the big picture of our software system. This is where acceptance tests come into play.

An acceptance test tells us whether a feature is present and working inside our system. The sum of all acceptance tests gives us a list of all features that our system provides.

An acceptance test walks a single path through the system. It guarantees that the needed functionality is in the system and that the individual parts work together.

The red line is dotted at the system boundary because we usually replace all externals with fakes: the user interface, external systems, the file system and the database. This allows us to test special cases easily and to run these tests in a couple of seconds, which gives me quick feedback during development on whether I broke an existing feature.

Of course, these two kinds of tests are not enough to deliver software with guaranteed quality.

But they are the foundation for further quality assurance measures and the main driver of development: they tell the developer whether the software works correctly (unit tests) and does the correct thing (acceptance tests).

There are additional automated tests – system and constraint tests – that we use for regression testing so we know that our software works at the end of each Scrum Sprint, every second week.

Manual tests are performed both to find gaps in the existing test suite and to test things that cannot be reasonably automated. But compared to unit and acceptance tests, the number of manual tests is very small.

But where do these acceptance tests come from?

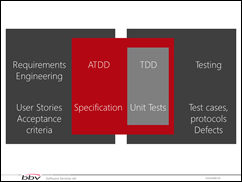

We work mainly with user stories to gather requirements. These user stories come with acceptance criteria that were discussed with the product owner and other business representatives. These acceptance criteria are translated into acceptance tests.

The acceptance tests form the frame for the real coding done with Test Driven Development.

Finally, testing takes all these artifacts and extends them with test cases, test protocols and, where applicable, defects.

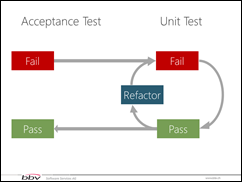

Acceptance Test Driven Development consists of the following steps.

First, we translate the acceptance criteria into an executable acceptance test. This test should fail because the functionality is not yet implemented. Then we implement this functionality with Test Driven Development: Write a failing unit test, make it pass, clean things up and start over.

At some point, all the code needed to fulfill the acceptance test is in place and the acceptance test passes.

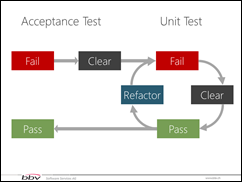

We extended this process a little by…

…adding steps to make the messages of the failing tests as clear as possible. This is important because once an existing test fails in the future, we want to find the cause quickly without the need to use the debugger.
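As a rough illustration of what a clear failure message can look like, here is a minimal Python sketch. It is not taken from the talk: `total_price` and `check_total` are hypothetical names, and the point is only that the message states the scenario, the expectation and the actual result so that no debugger is needed.

```python
def total_price(prices_in_cents):
    """Hypothetical function under test: sums item prices given in cents."""
    return sum(prices_in_cents)


def check_total(prices_in_cents, expected):
    """Fail with a message naming the input, the expected and the actual value."""
    actual = total_price(prices_in_cents)
    if actual != expected:
        raise AssertionError(
            f"total of {prices_in_cents} should be {expected} cents "
            f"but was {actual} cents"
        )


check_total([250, 199], 449)  # passes silently
```

A bare "assertion failed" would force whoever sees the red test to reproduce the scenario first; a message like the one above already names it.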

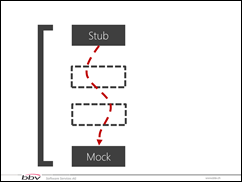

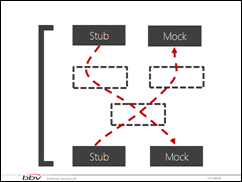

When writing an acceptance test, we specify how the functionality is invoked, for example by replacing the real service interface with a stub that we can call from the test code. And we define the effect that should be observed once the functionality has been executed, for example by using a mock instead of a real database or external system.

This gives us the frame inside which we can develop the functionality.

Normally, things are a bit more complicated so that we have to introduce several stubs and mocks to provide the system with all external information that it needs and to check whether all operations were executed correctly.
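The following Python sketch shows one way such a frame could look. Everything in it is an assumption made for illustration: `OrderFacade`, the rate service and the order store do not come from the talk, and Python's `unittest.mock` stands in for whatever faking approach a project actually uses.

```python
from unittest.mock import Mock


class OrderFacade:
    """Hypothetical system entry point; all names are illustrative only."""

    def __init__(self, rate_service, order_store):
        self._rate_service = rate_service
        self._order_store = order_store

    def place_order(self, amount_usd):
        # Ask the external service for data, then persist the result.
        rate = self._rate_service.usd_to_chf()
        self._order_store.save(round(amount_usd * rate, 2))


# Stub: provides the system with the external information it needs.
rate_service = Mock()
rate_service.usd_to_chf.return_value = 0.5

# Mock: records calls so the effect at the system boundary can be checked.
order_store = Mock()

OrderFacade(rate_service, order_store).place_order(10.0)

# The observable effect: exactly one order was saved with the converted amount.
order_store.save.assert_called_once_with(5.0)
```

The stub controls what flows into the system and the mock checks what flows out, so the code in between can be developed freely.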

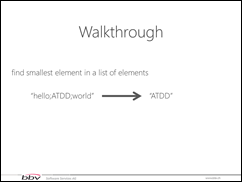

Now it’s time for a demo. Our job is to implement a service method that takes a semicolon-separated list of strings and returns the alphabetically smallest element. Furthermore, each call to the method should be logged.

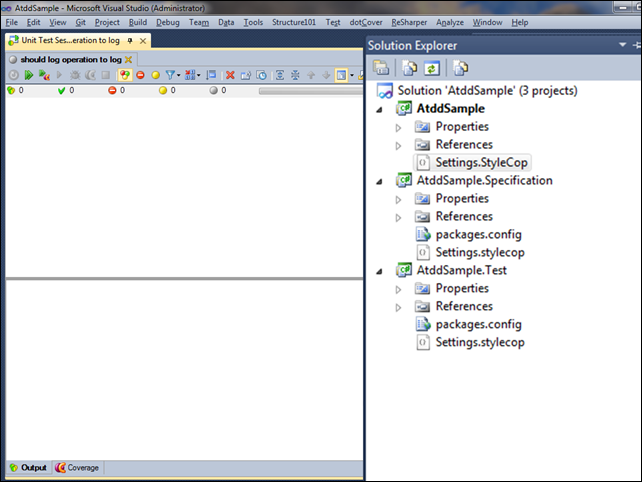

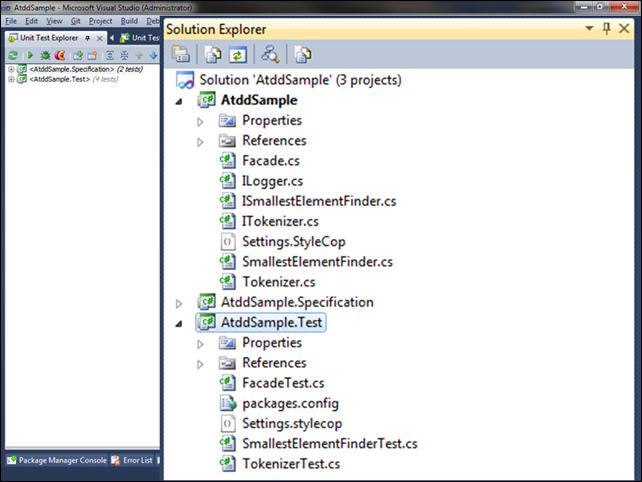

I’ve prepared a sample solution containing three projects: one for the production code, one for the acceptance tests (also known as specifications) and one for the unit tests.

I’ll use Machine.Specifications to write the acceptance tests/specifications.

Video: Writing specification

First, I translate the acceptance criteria into an executable specification or acceptance test. The Subject is used to group specifications belonging together.

Video: Run specification to see not yet implemented features

These specifications can be run and are shown as ignored, meaning that they are not yet implemented.

Video: Implement first part of specification

I define that the requested functionality should be provided by a method on a Facade. The Establish block is used to set up the acceptance test. The Because block executes the action under test. And the It blocks are used to check whether the functionality was executed correctly.
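The demo itself is written in C# with Machine.Specifications and is only shown in the video. As a language-neutral illustration of the same three-part structure, here is a small Python sketch; the method names `establish`, `because` and `it_should_…` merely mirror the MSpec blocks, and the `Facade` is a minimal stand-in, not the implementation from the demo.

```python
class Facade:
    """Minimal stand-in so the sketch runs; not the Facade from the demo."""

    def get_smallest_element(self, values):
        return min(values.split(";"))


class WhenRequestingTheSmallestElement:
    """One specification: set up once, act once, then check each criterion."""

    def establish(self):
        # Mirrors the Establish block: build the system under test.
        self.facade = Facade()

    def because(self):
        # Mirrors the Because block: the single action being specified.
        self.result = self.facade.get_smallest_element("pear;apple;fig")

    def it_should_return_the_smallest_element(self):
        # Mirrors an It block: one observable outcome per check.
        assert self.result == "apple"


spec = WhenRequestingTheSmallestElement()
spec.establish()
spec.because()
spec.it_should_return_the_smallest_element()
```

Keeping the action separate from the checks means several criteria can be verified against the result of one and the same action.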

Video: Make specification runnable

In order to run the specification, I have to fix the compile errors by introducing a dummy Facade implementation.

Video: Let specification fail

When I run the specification, the acceptance criterion that it should return the smallest element fails; the logging criterion is still shown as not yet implemented.

Now I can start implementing the required functionality using Test Driven Development.

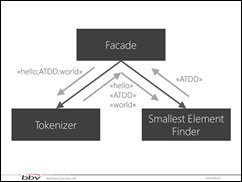

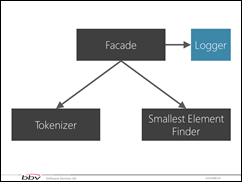

While implementing the functionality with Test Driven Development, this design came up. The façade uses the Tokenizer to split the list of values, passes them to the SmallestElementFinder, which returns the smallest element, and returns the result back to the caller.
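A Python sketch of this collaboration might look as follows. The class names come from the slide, while the method names and the use of `min` (which compares strings by code point and is therefore case-sensitive) are assumptions; the real code in the demo is C#.

```python
class Tokenizer:
    """Splits the semicolon-separated input into its elements."""

    def tokenize(self, values):
        return values.split(";")


class SmallestElementFinder:
    """Returns the alphabetically smallest element (assumed: code point order)."""

    def find_smallest(self, elements):
        return min(elements)


class Facade:
    """Entry point that only coordinates its two collaborators."""

    def __init__(self, tokenizer, finder):
        self._tokenizer = tokenizer
        self._finder = finder

    def get_smallest_element(self, values):
        elements = self._tokenizer.tokenize(values)
        return self._finder.find_smallest(elements)


facade = Facade(Tokenizer(), SmallestElementFinder())
smallest = facade.get_smallest_element("pear;apple;fig")
```

Each class has a single responsibility, which is what makes the three of them easy to unit test separately.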

The solution has grown by these types and their interfaces.

Video: Run unit tests

I run the unit tests of the three classes that were implemented.

Video: Update acceptance test to meet changed design

After updating the specification because the constructor of the Facade has changed, the specification for the result value passes. Note that the system internals are not mocked but the real implementations are used (Tokenizer, SmallestElementFinder).

Now it’s time to get the logging specification running.

Video: Introduce ILogger to abstract external dependency

First, I introduce the interface ILogger in order to abstract away system externals like log files.

Video: Introduce LoggerStub to simulate external dependency

Then, I implement a simple stub that is used to simulate the logger in the specification.

Video: Write logging specification

Now, the specification can be written. It states that there should be a log message containing the input and output values. It is not an exact match because I’m not interested in the details but only in whether there is a log entry at all. The unit tests deal with the details.
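Sketched in Python, such a loose check could look like this. The `LoggerStub` name is from the video caption; its `messages` list, the log format and the helper `mentions_input_and_output` are assumptions for illustration.

```python
class LoggerStub:
    """Stands in for the real logger and remembers every message."""

    def __init__(self):
        self.messages = []

    def log(self, message):
        self.messages.append(message)


class Facade:
    """Simplified stand-in that logs each call; the log format is assumed."""

    def __init__(self, logger):
        self._logger = logger

    def get_smallest_element(self, values):
        result = min(values.split(";"))
        self._logger.log(f"smallest element of '{values}' is '{result}'")
        return result


def mentions_input_and_output(message, values, result):
    # Strip the input first so the result is not matched inside the input.
    return values in message and result in message.replace(values, "")


logger = LoggerStub()
result = Facade(logger).get_smallest_element("pear;apple;fig")

# Loose check: some message mentions input and output, wording does not matter.
assert any(
    mentions_input_and_output(m, "pear;apple;fig", result) for m in logger.messages
)
```

Because the specification does not pin down the exact wording, the log format can change later without breaking the acceptance test.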

Video: Let specification fail

The logging specification fails because logging is not yet implemented.

Video: Extend Facade with ILogger

I extend the constructor of the Facade with the logger. Dependency injection is a simple way to make classes and whole systems easy to test.

The functionality is implemented using Test Driven Development. The design remains as specified in the acceptance test.

Video: Let acceptance test succeed

Finally, the acceptance test passes and I’m finished with the implementation.

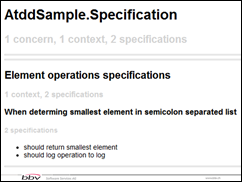

Machine.Specifications allows me to create a report containing all specifications. The report for this small example looks like this:

We use the report to discuss whether we implemented the correct functionality with our product owner and as a reference when we are not sure how the system behaves in certain situations.

The result of using Acceptance Test Driven Development and Test Driven Development is software that remains changeable.

While unit tests enable the team to keep the code clean and simple by using refactoring, acceptance tests both describe and check the functionality of the system. The description helps us to make decisions consistent with the existing software. Using the acceptance tests as regression tests helps us to keep the software functional.

Therefore, the software can be pushed forward without the risk of breaking existing functionality.

So that in the end, our software looks more like this…

…than the ruin I’ve shown in the beginning.

Great as always, Urs! Congratulations… and thanks! There is still little written about ATDD.

Some questions:

(1) Is MSpec the BDD framework you recommend?

(2) By replacing the fakes at the system boundary (dotted red line) with real collaborators, we would be testing the system completely. But then it would be an integration test (system test), wouldn’t it?

(3) If I want to test, for example in an MVP component, that the presenter calls view.ShowAsModal if view.ShowMode=Modal and view.ShowAsModeless if view.ShowMode=Modeless, would that be an acceptance test or a unit test?

Thanks again!

Hi again,

Forget the third question, Urs.

As it stands, it is not a good example (nor a good question, surely). I will try to explain it better.

In a traditional application, what you explain is clear to me:

for example, in an acceptance test I would create a real presenter/controller, a stub of the view, a real (surely) application service and a stub (or mock, for exception handling) repository. Is that right?

It is in my current development (a small framework for MVP applications on WinForms) where things are less clear to me.

Let’s take another example, now with the MVP framework.

One of my requirements is to initialize views (forms) with a few default behaviors (font, style, caption, …).

I thought about the related classes (not in detail, i.e. no BDUF, only to grasp the big picture) and came up with Configuration, IViewInitializingStrategy (for custom initializations), …

Then I could have “only” two acceptance tests:

1/ Create a form, customize it (font, style, caption), wrap it in a view, set AutoInitialize=True, start the presenter and assert that the form/view has changed (its font, style, caption, …)

2/ Create a form, customize it (font, style, caption) and so on, but set AutoInitialize=False, start the presenter and assert that the form/view has NOT changed, keeping its font, style, caption, …

No Configuration, no IViewInitializingStrategy… only two (acceptance) tests, and a form, a view and a presenter (or so).

But these acceptance tests will lead to a set of unit tests:

1/ Several with Configuration as SUT

2/ Two with Configuration as mock (checking that the presenter calls the configuration, passing the view as parameter, when the view’s AutoInitialize is True and not when AutoInitialize is False)

3/ Etc.

Would that be right?

In any case, sorry for my initial third question. Maybe I should have explained it like this, in more detail…

@JC

Hi JC

1) I use MSpec in my projects (open source and commercial). But there are other frameworks that may be better suited depending on your project set-up (technology, people), for example SpecFlow if you want to take advantage of the Gherkin way of specification and really have a business representative who writes the specifications, or frameworks dedicated to web applications.

2) Yes, when using the real collaborators instead of the fakes, you’ll get a system test. The reason why we use fakes is to make the tests faster, to be able to test edge cases and to implement our system while the dependency is not yet available.

3) I don’t know MVC, therefore I don’t know what AutoInitialize does 🙁

But as you write, there exists an acceptance test for each micro feature. The error handling and special cases are left to the unit tests. There are about 10 times more unit tests than acceptance tests.

In acceptance tests, you should use fakes only for instances at the boundary of your system; while in unit tests, all dependencies are fakes to get complete isolation from the environment.
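To make the difference concrete, here is a Python sketch based on the demo’s design. The method names and the use of `unittest.mock` are assumptions; the original tests are written in C#.

```python
from unittest.mock import Mock


class Tokenizer:
    def tokenize(self, values):
        return values.split(";")


class SmallestElementFinder:
    def find_smallest(self, elements):
        return min(elements)


class Facade:
    def __init__(self, tokenizer, finder, logger):
        self._tokenizer = tokenizer
        self._finder = finder
        self._logger = logger

    def get_smallest_element(self, values):
        result = self._finder.find_smallest(self._tokenizer.tokenize(values))
        self._logger.log(f"{values} -> {result}")
        return result


# Acceptance test: real internals, a fake only at the system boundary (the logger).
boundary_logger = Mock()
facade = Facade(Tokenizer(), SmallestElementFinder(), boundary_logger)
assert facade.get_smallest_element("b;a;c") == "a"
assert boundary_logger.log.called

# Unit test of the Facade: every dependency is a fake, so only the Facade is tested.
tokenizer = Mock()
tokenizer.tokenize.return_value = ["x", "y"]
finder = Mock()
finder.find_smallest.return_value = "x"
facade = Facade(tokenizer, finder, Mock())
assert facade.get_smallest_element("ignored") == "x"
tokenizer.tokenize.assert_called_once_with("ignored")
finder.find_smallest.assert_called_once_with(["x", "y"])
```

The acceptance test survives a redesign of the internals as long as the Facade keeps its behavior, while the unit test pins down exactly how the Facade talks to its collaborators.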


Hi,

I like that explanation of ATDD.

Maybe you can help me out of some points which are still unclear for me:

1. Is there a special reason for starting or entering the system via a facade?

2. Do you have any advice for testing e.g. a cash register display? I am struggling with implementing a display stub that has some getter methods, whereas those methods are only needed for testing.

Thanks in advance

Testing against a facade has the advantage that you can change the implementation without changing the tests. This makes refactoring a lot easier and safer.

Additionally, a facade can be used to name operations in a way that is easy to understand (name the methods on the facade in a way that matches the level of abstraction of the facade).

Regarding your second question: do you know “clean architecture”?

In a clean architecture all UI stuff is in a layer that can be replaced in tests by the tests themselves. The result is that you can test the business logic without the UI. Just google the term “clean architecture”.
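A minimal Python sketch of that idea for the cash register example, under the assumption that the business logic only needs a `show` operation from the display. `CashRegister`, `RecordingDisplay` and all method names are hypothetical.

```python
class CashRegister:
    """Business logic; knows only that a display offers show(text)."""

    def __init__(self, display):
        self._display = display
        self._total_cents = 0

    def scan(self, price_cents):
        self._total_cents += price_cents
        self._display.show(f"{self._total_cents / 100:.2f}")


class RecordingDisplay:
    """Lives in the test code only, so production code needs no test getters."""

    def __init__(self):
        self.shown = []

    def show(self, text):
        self.shown.append(text)


display = RecordingDisplay()
register = CashRegister(display)
register.scan(250)
register.scan(199)

assert display.shown == ["2.50", "4.49"]
```

The recording lives in the test’s own display implementation, so the real display class does not need getter methods that exist only for testing.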

Hope that helps.

Urs