This blog post is part of a larger blog post series. Get an overview of the content here.

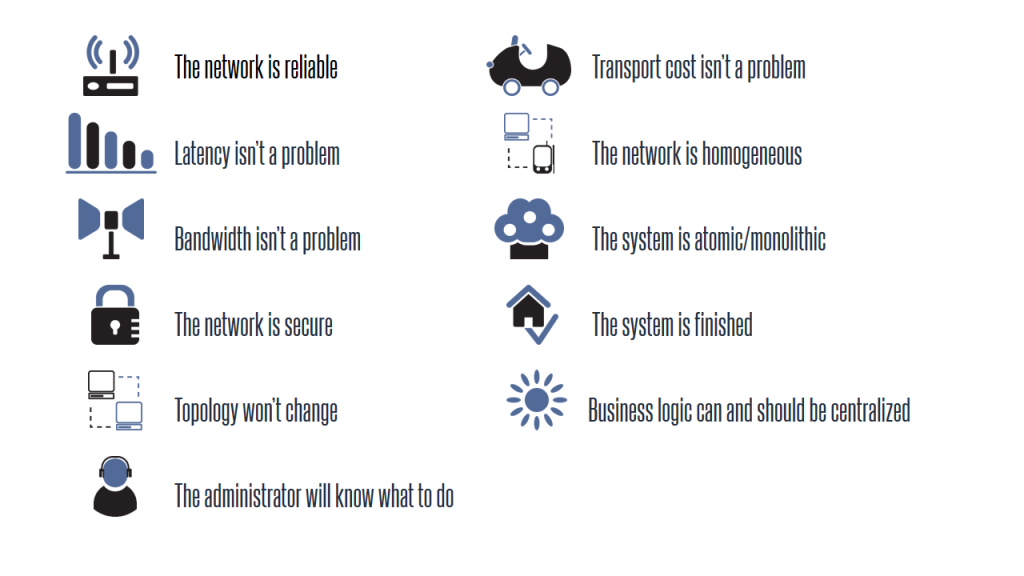

The fallacies of distributed computing describe common but false assumptions that developers and architects make about distributed systems. In 1994 Peter Deutsch wrote down seven fallacies. In 1997 James Gosling added another one. Together they are known as “The eight fallacies of distributed computing”. In 2006 Ted Neward added three more fallacies, which are not as widely known.

Let us depict the first fallacy: the network is reliable.

Code that calls a remote service as if it were a local method completely hides the fact that exceptions such as timeouts can occur on that call. The network can’t be assumed to be reliable: hardware or software defects, or even security problems, make it unreliable. Furthermore, ask yourself what happens to the request when the method “process” throws an exception. Exactly: the initiating request is lost in that case. Data can also get lost when it is sent over the wire. The situation gets even more complicated if you collaborate with an external partner, because their side of the connection is not under your direct control.
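As an illustration of the problem described here, the following is a minimal Python sketch of a remote call that looks like a local one. All names (`FlakyNetwork`, `RemoteOrderService`, `handle`) are hypothetical, and the failure is simulated rather than coming from a real network stack.

```python
class NetworkError(Exception):
    """Raised when the simulated network drops a request."""


class FlakyNetwork:
    """Hypothetical stand-in for a real network: every odd-numbered send fails."""

    def __init__(self):
        self.sent = 0

    def send(self, payload):
        self.sent += 1
        if self.sent % 2 == 1:
            raise NetworkError("connection timed out")
        return f"processed {payload}"


class RemoteOrderService:
    """Looks like a local object, but every call crosses the network."""

    def __init__(self, network):
        self._network = network

    def process(self, order):
        # Nothing in this signature hints that a timeout can occur here.
        return self._network.send(order)


def handle(service, order):
    """Calls the service and reports whether the request survived."""
    try:
        return service.process(order)
    except NetworkError:
        # Without a retry or a durable queue the request is simply gone.
        return None
```

Calling `handle` twice on the same service shows the effect: the first request is lost and returns `None`, while the second one goes through. The caller has no record of the lost request unless it keeps one itself.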

It is important to do a risk analysis before you choose any solution. In particular, weigh the risk of failure against the investment needed to make the system more reliable. On both the hardware and the software side you can introduce redundancy. On the software side you can also use reliable messaging instead of classic remote procedure calls. If you don’t have a reliable messaging infrastructure at hand, you can use retry or acknowledgement mechanisms and make your messages idempotent, so that processing the same message twice has the same effect as processing it once.
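The retry, acknowledgement and idempotency ideas can be sketched roughly as follows. This is a simplified Python illustration under stated assumptions: `LossyChannel` is a hypothetical channel that delivers the message but loses the acknowledgement, and `IdempotentReceiver` deduplicates by a message ID that the sender reuses on every retry.

```python
import uuid


class TransientError(Exception):
    """Simulated failure: the acknowledgement never reached the sender."""


class IdempotentReceiver:
    """Processes each message ID at most once, however often it arrives."""

    def __init__(self):
        self.seen = set()
        self.processed = []

    def receive(self, message_id, body):
        if message_id in self.seen:
            return "ack"  # duplicate: acknowledge again, do not reprocess
        self.seen.add(message_id)
        self.processed.append(body)
        return "ack"


class LossyChannel:
    """Hypothetical channel that loses the first `losses` acknowledgements."""

    def __init__(self, receiver, losses):
        self.receiver = receiver
        self.losses = losses

    def send(self, message_id, body):
        ack = self.receiver.receive(message_id, body)  # message is delivered
        if self.losses > 0:
            self.losses -= 1
            raise TransientError("ack lost")  # sender cannot tell it arrived
        return ack


def send_with_retry(channel, body, max_attempts=3):
    """Resends the same message ID until an acknowledgement arrives."""
    message_id = str(uuid.uuid4())  # one ID per logical message, reused on retry
    for attempt in range(1, max_attempts + 1):
        try:
            channel.send(message_id, body)
            return attempt  # number of attempts that were needed
        except TransientError:
            continue
    raise TransientError(f"no ack after {max_attempts} attempts")
```

With two lost acknowledgements the sender needs three attempts, yet the receiver processes the message only once. A production version would typically add a delay between attempts and persist the set of seen IDs; both are left out here to keep the sketch short.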

The second fallacy is the assumption that latency is not a problem. Latency is how much time it takes for data to move from one place to another (as opposed to bandwidth, which is how much data we can transfer during that time). Latency is a problem! It takes time to cross the network in one direction. That time can be small on a LAN but large on WAN and internet connections. Network access is many times slower than in-memory access. Do you remember the early days of the good old remote objects? Even accessing a property of a remote object caused a round trip to the server. Nowadays we use data transfer objects. But what happens if we lazy load data with an ORM?

Every network call comes with latency.

A general rule of thumb is: Don’t cross the network if you don’t have to. Inter-object chit-chat should never cross the network. This is very expensive. If you have to cross the network, take all the data you might need with you.
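The difference between chatty remote objects and a data transfer object can be made visible by counting round trips. The following Python sketch uses a hypothetical `FakeServer` that only simulates the network by incrementing a counter; the customer data is made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerDto:
    """Data transfer object: all needed fields travel in one response."""
    name: str
    street: str
    city: str


class FakeServer:
    """Hypothetical server that counts how often it is called."""

    def __init__(self):
        self.round_trips = 0
        self._data = {"name": "Ada", "street": "Main St 1", "city": "Zurich"}

    def get_property(self, key):
        self.round_trips += 1  # one network round trip per property
        return self._data[key]

    def get_customer(self):
        self.round_trips += 1  # one round trip for the whole object
        return CustomerDto(**self._data)


def chatty_label(server):
    """Remote-object style: three property reads, three round trips."""
    return ", ".join(server.get_property(k) for k in ("name", "street", "city"))


def chunky_label(server):
    """DTO style: one round trip, then purely local access."""
    dto = server.get_customer()
    return ", ".join((dto.name, dto.street, dto.city))
```

Both functions return the same label, but `chatty_label` costs three round trips and `chunky_label` only one. Assuming a round trip of 50 ms, that is 150 ms versus 50 ms for the same result. Lazy loading through an ORM behaves like the chatty variant whenever each access triggers its own query.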

The third fallacy is the assumption that bandwidth is not a problem. Although bandwidth keeps growing, the amount of data we need to transfer grows faster. When we transfer lots of data in a given period of time, network congestion may set in, and the effective bandwidth drops dramatically. ORMs that eagerly fetch too much data are another major consumer of bandwidth.

You have to balance bandwidth against latency. As advised before, you should take all the data you need with you to reduce the impact of latency, but this contradicts the advice that follows from the bandwidth fallacy. As usual, it is all about the right tradeoffs for your business scenarios. For example, you can tune your ORM to fetch data eagerly where necessary and switch to lazy loading where appropriate. Finally, if you have really time-critical data, move it to a separate network or apply Quality of Service in your network management.
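One rough way to reason about this tradeoff is to estimate the transfer time as the number of round trips times the round-trip time, plus the payload size divided by the bandwidth. The Python sketch below applies that estimate to a made-up workload (a customer with 100 orders of 2,000 bytes each, of which only 5 are actually viewed). The numbers and function names are assumptions for illustration, and the model ignores congestion, protocol overhead and the size of the customer record itself.

```python
def transfer_time(round_trips, payload_bytes, rtt_s, bytes_per_s):
    """Rough cost in seconds: latency part plus bandwidth part."""
    return round_trips * rtt_s + payload_bytes / bytes_per_s


def cheaper_strategy(rtt_s, bytes_per_s):
    """Compares eager and lazy loading for one made-up workload."""
    # Eager: one round trip that ships all 100 orders at 2,000 bytes each.
    eager = transfer_time(1, 100 * 2_000, rtt_s, bytes_per_s)
    # Lazy: one call for the customer plus one per order actually viewed (5).
    lazy = transfer_time(1 + 5, 5 * 2_000, rtt_s, bytes_per_s)
    return "eager" if eager < lazy else "lazy"
```

On a slow link with low latency, for example `cheaper_strategy(0.005, 100_000)`, the lazy variant comes out ahead because the payload dominates. On a fast link with high latency, for example `cheaper_strategy(0.2, 10_000_000)`, the eager variant wins because the round trips dominate. Which side you are on depends on your own measurements, not on these sample figures.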

The next post will cover even more about fallacies.
