This blog post is part of a larger series. Get an overview of the content here.

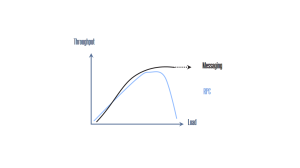

At low load, RPC-style communication often appears to perform better than messaging. But as the load on the system increases and no more threads are available, RPC performance degrades. One cause is that every incoming request needs a thread from the thread pool and memory for its parameters. A messaging infrastructure, by contrast, can deterministically assign a fixed number of threads to handle incoming messages. If the load increases, message consumers can be scaled out horizontally. Of course, the infrastructure must still be provisioned with enough hardware so that the queue of incoming requests does not grow without bound.
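To make the fixed-concurrency point concrete, here is a minimal Python sketch, assuming an in-memory `queue.Queue` stands in for the receiver's incoming queue. The names `start_consumers`, `stop_consumers` and `MAX_CONCURRENCY` are hypothetical and not part of any messaging library. However many messages arrive, only `concurrency` threads ever handle them; the backlog simply waits in the queue.

```python
import queue
import threading
from typing import Callable, List

# Assumed value for illustration; a real endpoint would make this configurable.
MAX_CONCURRENCY = 4


def start_consumers(incoming: queue.Queue, handle: Callable[[object], None],
                    concurrency: int = MAX_CONCURRENCY) -> List[threading.Thread]:
    """Start a fixed number of worker threads that drain `incoming`.

    The thread count stays constant no matter how many messages arrive;
    excess messages wait in the queue instead of demanding new threads.
    In this sketch `handle` is assumed not to raise.
    """
    def worker() -> None:
        while True:
            message = incoming.get()
            try:
                if message is None:  # sentinel: stop this worker
                    return
                handle(message)
            finally:
                incoming.task_done()  # lets incoming.join() track completion

    workers = [threading.Thread(target=worker, daemon=True) for _ in range(concurrency)]
    for t in workers:
        t.start()
    return workers


def stop_consumers(incoming: queue.Queue, workers: List[threading.Thread]) -> None:
    """Send one sentinel per worker and wait for all of them to exit."""
    for _ in workers:
        incoming.put(None)
    for t in workers:
        t.join()
```

Scaling out horizontally then means running more processes like this against the same queue, rather than letting one process grab an unbounded number of threads.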

Message receivers wrap the consumption of a message in a transaction, but only once they intend to consume a specific message. In RPC communication, transactions are opened after the data has been received. When load increases and the infrastructure spends more time allocating and waiting for available resources, transactions are therefore held open longer than in messaging communication.
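The following Python sketch illustrates a transactional receive with a hypothetical in-memory `TransactionalQueue`: the receive only starts when the receiver decides to consume a message, the message leaves the queue for good only when the handler completes, and a failure rolls the receive back so the message is not lost. Durable brokers provide this with real transactions or acknowledgements; this class only mimics the semantics and is not any library's API.

```python
import threading
from collections import deque
from typing import Any, Callable


class TransactionalQueue:
    """Hypothetical in-memory queue with transactional-receive semantics."""

    def __init__(self) -> None:
        self._items: deque = deque()
        self._lock = threading.Lock()

    def send(self, message: Any) -> None:
        with self._lock:
            self._items.append(message)

    def receive(self, handle: Callable[[Any], None]) -> bool:
        # The "transaction" begins only when we actually take a message.
        with self._lock:
            if not self._items:
                return False
            message = self._items.popleft()
        try:
            handle(message)  # business logic runs inside the transaction
        except Exception:
            with self._lock:
                self._items.appendleft(message)  # rollback: message is not lost
            raise
        return True  # commit: the message is now gone from the queue

    def __len__(self) -> int:
        with self._lock:
            return len(self._items)
```

Because nothing is taken from the queue until `receive` is called, no resources are tied up for messages the receiver has not yet chosen to process.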

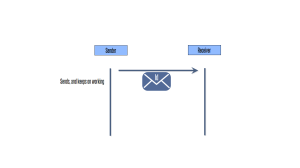

True asynchronous messaging is all about one-way, fire & forget messaging. All the messaging patterns we cover here are built on top of it.

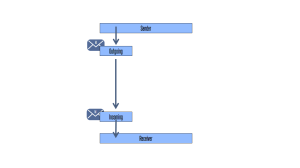

One-way, fire & forget messaging is pretty simple. Each message has a message id assigned by the sender. The sender makes sure that if it sends the same information twice, it assigns the same message id. Once the sender has sent its message to the receiver, it carries on with its work. Because every message carries a unique id, the receiver can deduplicate messages. If our messaging infrastructure uses the so-called store & forward messaging pattern (often referred to as the bus architecture style), we get resilience out of the box.
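A minimal Python sketch of the id and deduplication rules, under two assumptions not stated in the post: the sender derives the message id deterministically from a business key with `uuid.uuid5`, so resending the same information yields the same id, and the receiver remembers processed ids in an in-memory set (a real endpoint would persist them). `APP_NAMESPACE`, `make_message`, `send_one_way` and `DeduplicatingReceiver` are hypothetical names.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Set

# Hypothetical namespace used to derive deterministic message ids.
APP_NAMESPACE = uuid.UUID("6f1c2d3e-4a5b-4c6d-8e7f-9a0b1c2d3e4f")


@dataclass(frozen=True)
class Message:
    message_id: uuid.UUID
    body: str


def make_message(business_key: str, body: str) -> Message:
    # Same business key -> same id, so sending the same information twice
    # produces messages the receiver can recognise as duplicates.
    return Message(uuid.uuid5(APP_NAMESPACE, business_key), body)


def send_one_way(transport: Callable[[Message], None], message: Message) -> None:
    # Fire & forget: hand the message to the transport; the sender does not
    # wait for a reply or a result.
    transport(message)


class DeduplicatingReceiver:
    def __init__(self, handle: Callable[[Message], None]) -> None:
        self._handle = handle
        self._processed_ids: Set[uuid.UUID] = set()  # durable storage in practice

    def on_message(self, message: Message) -> None:
        if message.message_id in self._processed_ids:
            return  # duplicate: already handled, drop it
        self._handle(message)
        # Record the id only after successful handling; a real endpoint would
        # store it in the same transaction as the handler's work.
        self._processed_ids.add(message.message_id)
```

Sending `make_message("order-42", ...)` twice through `send_one_way(receiver.on_message, ...)` results in the handler running only once.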

A sender first checks its subscription store for any interested receivers. If a subscription is present, the sender stores the message in its local outgoing queue. The message remains in the outgoing queue as long as the remote machine is unavailable. As soon as the receiver's machine becomes available, the message is transferred to the receiver's incoming queue. If the receiver process is not online, the message remains in the incoming queue. Finally, when the receiver comes online, the message is picked up from the incoming queue and processed.
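The store & forward flow can be simulated in a few lines of Python. This is a hypothetical, in-memory sketch: `Endpoint`, `StoreAndForwardSender`, the `machine_up`/`process_online` flags and the dict used as subscription store are illustrative assumptions, and `deque`s stand in for what would be durable queues on disk.

```python
from collections import deque
from typing import Dict, List


class Endpoint:
    """Hypothetical receiver with its own incoming queue."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.machine_up = True      # can the transport reach this machine?
        self.process_online = True  # is the receiving process running?
        self.incoming: deque = deque()
        self.processed: List[str] = []

    def process_pending(self) -> None:
        # Only an online receiver picks messages from its incoming queue.
        while self.process_online and self.incoming:
            self.processed.append(self.incoming.popleft())


class StoreAndForwardSender:
    def __init__(self, subscriptions: Dict[str, List[Endpoint]]) -> None:
        self.subscriptions = subscriptions  # message type -> subscribed endpoints
        self.outgoing: deque = deque()      # local outgoing queue of (endpoint, message)

    def publish(self, message_type: str, message: str) -> None:
        # Store locally first, once per interested receiver, then try to forward.
        for endpoint in self.subscriptions.get(message_type, []):
            self.outgoing.append((endpoint, message))
        self.forward()

    def forward(self) -> None:
        # Move messages to reachable machines; keep the rest, in order.
        pending: deque = deque()
        while self.outgoing:
            endpoint, message = self.outgoing.popleft()
            if endpoint.machine_up:
                endpoint.incoming.append(message)
            else:
                pending.append((endpoint, message))
        self.outgoing = pending
```

Setting `machine_up = False` before `publish` leaves the message in `sender.outgoing`; a later `forward()` moves it to the receiver's `incoming` queue, and `process_pending()` handles it only once `process_online` is true.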

Messaging furthermore allows us to introduce fault tolerance, for example when the server crashes, the database is down, or deadlocks occur in the database. We discuss this in the next blog post.
